nigiri

12 / 2024

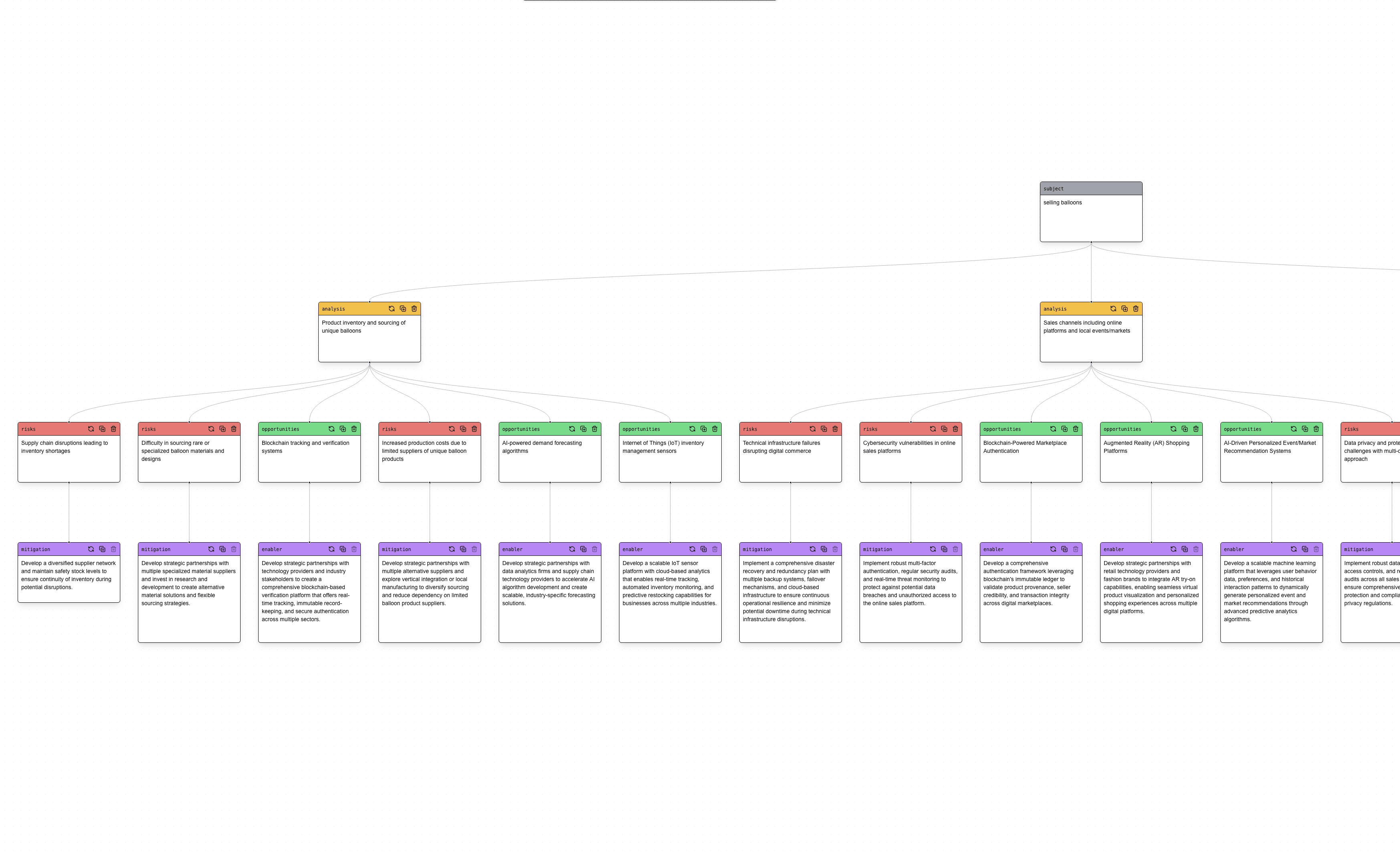

'Nigiri' explores automated LLM reasoning, similar to 'chain-of-thought' prompting, in a tree data structure. Prompts are organized as chains inside a hierarchy in which each node holds a piece of text. That text can be written manually or generated by the LLM. Users can edit any node, and the system automatically regenerates the nodes that follow it using the new context. The idea is to combine fast-forward generation with manual editing and refinement, which speeds up working through a line of thought.

The data is organized as a tree, so thoughts can depend on each other (parent and child) or exist next to each other (siblings). The nodes are laid out on a two-dimensional whiteboard, which makes it much easier to take in all the information at once.

Nigiri lets users create and process chains of thought in a tree structure. Each node can be edited manually, and the system then automatically regenerates the following nodes with the new context.
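
The edit-then-regenerate behaviour described above can be sketched roughly as follows. This is a minimal illustration, not Nigiri's actual implementation: the names `Node`, `edit`, `regenerate_descendants`, and `echo_generator` are hypothetical, the LLM call is replaced by a stub, and the rule that manually written nodes are left untouched during regeneration is an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical generator signature: takes the texts on the path from the
# root down to the parent node and returns the text for the new node.
Generator = Callable[[List[str]], str]


@dataclass(eq=False)
class Node:
    text: str = ""
    manual: bool = False  # assumption: manual nodes are never overwritten
    children: List["Node"] = field(default_factory=list)
    parent: Optional["Node"] = field(default=None, repr=False)

    def add_child(self, child: "Node") -> "Node":
        child.parent = self
        self.children.append(child)
        return child

    def context(self) -> List[str]:
        """Texts from the root down to and including this node."""
        path = []
        node: Optional[Node] = self
        while node is not None:
            path.append(node.text)
            node = node.parent
        return list(reversed(path))


def regenerate_descendants(node: Node, generate: Generator) -> None:
    """Walk the subtree top-down so each child sees its updated ancestors."""
    for child in node.children:
        if not child.manual:
            child.text = generate(node.context())
        regenerate_descendants(child, generate)


def edit(node: Node, new_text: str, generate: Generator) -> None:
    """Apply a manual edit, then refresh every node that depends on it."""
    node.text = new_text
    node.manual = True
    regenerate_descendants(node, generate)


def echo_generator(context: List[str]) -> str:
    """Stand-in for an LLM call; only echoes the most recent thought."""
    return "follow-up to: " + context[-1]


root = Node("Plan a trip", manual=True)
first = root.add_child(Node())
second = first.add_child(Node())
pinned = root.add_child(Node("pinned note", manual=True))

edit(root, "Plan a dinner", echo_generator)
```

After the call to `edit`, `first` and `second` are rebuilt from the new root text, while the manually written sibling `pinned` keeps its content. Regenerating top-down matters here: a child is refreshed before its own children, so each generated node sees the already updated chain above it.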